By Gordon Rugg

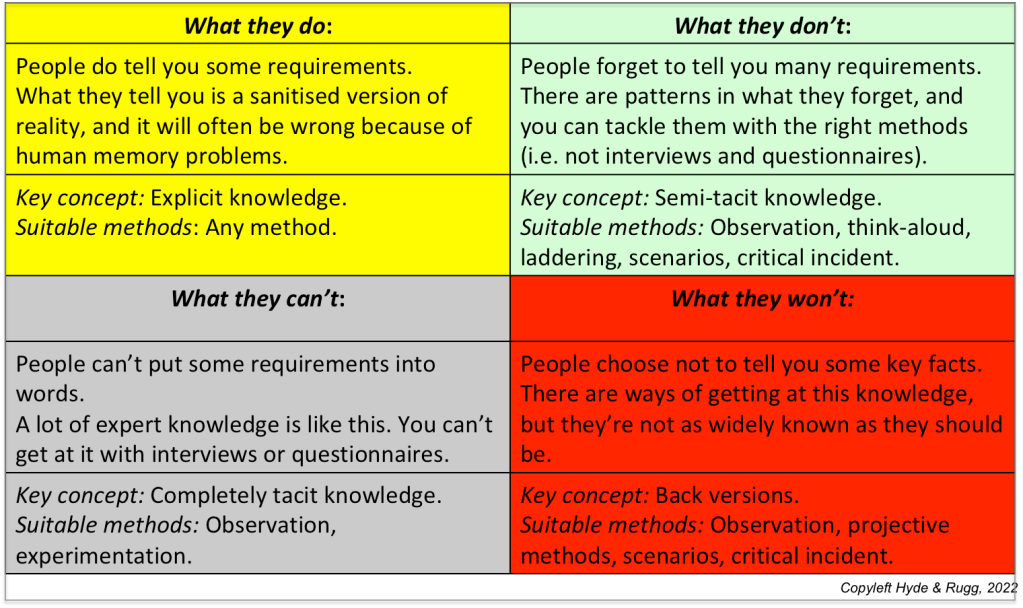

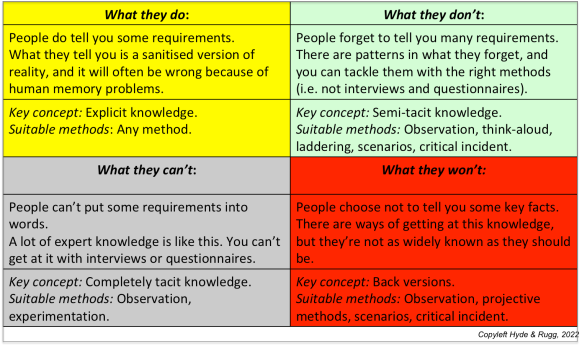

A recurrent theme in our blog articles is the distinction between explicit knowledge, semi-tacit knowledge and tacit knowledge. Another recurrent theme is human error, in various forms. In this article, we’ll look at how these two themes interact with each other, and at the implications for assessing whether or not someone is actually making an error. We’ll also re-examine traditional logic, and judgement and decision-making, and see how they make a different kind of sense in light of types of knowledge and mental processing. We’ll start with the different types of knowledge.

Explicit knowledge is fairly straightforward: it is knowledge that you can state on demand, such as today’s date, the capital of France, or the name of Batman’s sidekick. Semi-tacit knowledge is knowledge that you can access, but that doesn’t always come to mind when needed, for various reasons. A familiar example is when a name is on the tip of your tongue and you can’t quite recall it, and then it suddenly pops into your head days later when you’re thinking about something else. Tacit knowledge in the strict sense is knowledge that you have in your head, but that you can’t access regardless of how hard you try. For instance, you can clearly use most of the grammatical rules of your own language at native-speaker proficiency, but you can’t explicitly say what those rules are. Within each of these three types, there are several sub-types, which we’ve discussed elsewhere.

So why don’t we know what’s going on in our own heads, and does this relate to the problems that human beings have when they try to make logical, rational decisions? Answering these questions takes us into the mechanisms that the brain uses to tackle different types of task, and into the implications both for how people do or should behave and for assessing human rationality.